After we started using SQL 2014 availability groups in the corporate environment, we ran into a few isolated situations where the business asked us to turn off synchronous replication between the HA nodes because the performance was unacceptable. The DBA team came up with some tests to compare workloads in asynchronous and synchronous mode so we could measure the difference between the two and the penalties incurred. Some workloads performed within an acceptable margin, but with some types of workloads we noticed a penalty of over 40%. When you use synchronous replication for high availability, you accept a performance hit in exchange for maximum uptime, but adding more than 40% to run times is unacceptable.

When SQL 2016 was released, the marketing tagline we heard was: "It just runs faster". Microsoft turbocharged availability groups with an improved log transport process and some compression enhancements. Their goal was for a server with one synchronous secondary to reach 95% of the transaction log throughput of a standalone workload. They had very good results, as can be seen in the chart below. The blue line is a standalone system, yellow is SQL 2014 and orange is SQL 2016.

Based on this, we installed SQL 2016 on our test servers and reran the tests we had designed for SQL 2014. We were surprised that performance didn't improve; in fact, in our tests performance seemed to degrade in 2016. Let's look at the test setup and then at what changed between SQL Server 2014 and SQL Server 2016.

The Test:

We wanted to design a test that:

- Would have reproducible results

- Had multiple workload types so we could see if there was a change in asynchronous vs synchronous performance based on workload.

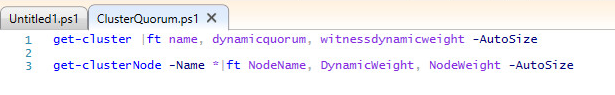

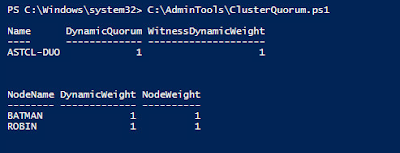

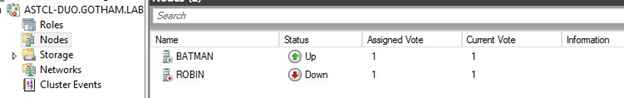

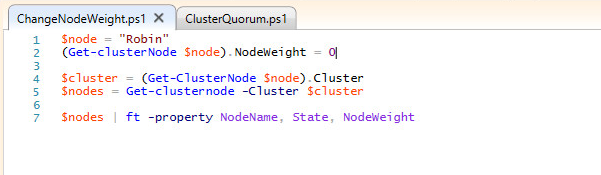

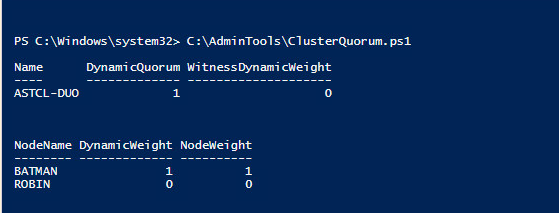

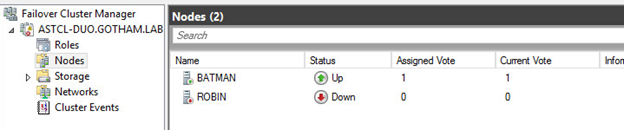

Based on these criteria, we decided not to use a third-party tool like HammerDB (the tool Microsoft used for the above graph) but instead to design our own scripts. While this method usually leads to very contrived workloads that aren't representative of a production load, we didn't see that as an issue. We were just trying to test relative performance, not benchmark anything.

After some initial testing, where we noticed that performance changed based on the number of inserts and the rows per insert (1 insert of 10000 rows versus 10000 inserts of 1 row), we decided on a testing methodology using only inserts. I set up a server with two databases and two availability groups, one asynchronous and one synchronous. I then created three tables in each database: a source table with over one million rows, an empty destination table and a tracer table to record the results. Then I created a job that runs a specific number of inserts, each inserting a specific number of rows. See the table below for how the workload changes in each run.

| Number of Inserts | Rows Per Insert |
|---|---|
| 1 | 1000000 |
| 10 | 100000 |
| 100 | 10000 |
| 1000 | 1000 |
| 10000 | 100 |
| 100000 | 10 |
| 1000000 | 1 |

I ran the test through 100 loops for each number-of-inserts/rows-per-insert combination. For each loop I would record the start time and end time and log it to a table. Then once I had finished all the tests, I could pull data out to compare the results.
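The workload matrix and timing loop described above can be sketched in a few lines. This is only an illustration of the methodology, not the actual job: the real tests ran as T-SQL against the availability group databases, and `run_batch` here is a hypothetical placeholder for whatever performs the inserts.

```python
import time

TOTAL_ROWS = 1_000_000  # every run moves the same total number of rows


def workload_matrix(total_rows=TOTAL_ROWS):
    """Return (number_of_inserts, rows_per_insert) pairs, one per power of ten."""
    pairs = []
    inserts = 1
    while inserts <= total_rows:
        pairs.append((inserts, total_rows // inserts))
        inserts *= 10
    return pairs


def time_workload(run_batch, inserts, rows_per_insert, loops=100):
    """Run one workload `loops` times and return the elapsed milliseconds of each loop.

    `run_batch` is a hypothetical callable standing in for the insert job.
    """
    elapsed_ms = []
    for _ in range(loops):
        start = time.perf_counter()
        run_batch(inserts, rows_per_insert)
        elapsed_ms.append((time.perf_counter() - start) * 1000.0)
    return elapsed_ms


if __name__ == "__main__":
    for inserts, rows in workload_matrix():
        print(f"{inserts:>9} inserts x {rows:>9} rows per insert")
```

Keeping the total row count fixed means any change in run time between rows of the matrix comes from how the work is batched, not from how much data is moved.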

The Results:

Results in 2014:

| Inserts | Rows Per Insert | Async ms | Sync ms | Penalty ms | Pen ms/ins | Penalty % | Async ins/s | Sync ins/s | Penalty ins/s |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1000000 | 7560 | 8204 | 645 | 644.85 | 9% | 0.13 | 0.12 | 0.01 |
| 10 | 100000 | 7543 | 10668 | 3125 | 312.51 | 41% | 1.33 | 0.94 | 0.39 |
| 100 | 10000 | 8537 | 10006 | 1469 | 14.69 | 17% | 11.71 | 9.99 | 1.72 |
| 1000 | 1000 | 10647 | 15097 | 4450 | 4.45 | 42% | 93.93 | 66.24 | 27.69 |
| 10000 | 100 | 30625 | 39787 | 9162 | 0.92 | 30% | 326.53 | 251.34 | 75.19 |
| 100000 | 10 | 247095 | 327879 | 80784 | 0.81 | 33% | 404.70 | 304.99 | 99.71 |
| 1000000 | 1 | 2048431 | 2892779 | 844348 | 0.84 | 41% | 488.18 | 345.69 | 142.49 |

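You can see that you end up with a much larger total penalty if you do a high number of singleton inserts as opposed to doing one insert of a high number of rows: the penalty per individual insert shrinks, but the total penalty in milliseconds and the percentage penalty both grow.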
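For anyone who wants to reproduce the derived columns, here is a minimal sketch of how they appear to be calculated. The formulas are my reading of the table (the percentage is relative to the asynchronous time), and the function name is made up for illustration. Results can differ slightly from the published figures because the millisecond columns are themselves rounded.

```python
def penalty_metrics(inserts, async_ms, sync_ms):
    """Derive the penalty columns from one row's async and sync run times."""
    penalty_ms = sync_ms - async_ms
    async_ins_per_s = inserts / (async_ms / 1000.0)
    sync_ins_per_s = inserts / (sync_ms / 1000.0)
    return {
        "penalty_ms": penalty_ms,
        "penalty_ms_per_insert": penalty_ms / inserts,
        # Percentage is measured against the asynchronous run time.
        "penalty_pct": 100.0 * penalty_ms / async_ms,
        "async_ins_per_s": async_ins_per_s,
        "sync_ins_per_s": sync_ins_per_s,
        "penalty_ins_per_s": async_ins_per_s - sync_ins_per_s,
    }


if __name__ == "__main__":
    # The 1000 inserts x 1000 rows row from the 2014 results.
    for name, value in penalty_metrics(1000, 10647, 15097).items():
        print(f"{name:>22}: {value:.2f}")
```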

We ran the same tests using the same setup in SQL 2016.

Results in 2016:

| Inserts | Rows Per Insert | Async ms | Sync ms | Penalty ms | Pen ms/ins | Penalty % | Async ins/s | Sync ins/s | Penalty ins/s |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1000000 | 7279 | 14189 | 6910 | 6910.25 | 95% | 0.14 | 0.07 | 0.07 |
| 10 | 100000 | 7029 | 15467 | 8438 | 843.79 | 120% | 1.42 | 0.65 | 0.78 |
| 100 | 10000 | 8114 | 16736 | 8623 | 86.23 | 106% | 12.32 | 5.98 | 6.35 |
| 1000 | 1000 | 10658 | 22539 | 11881 | 11.88 | 111% | 93.83 | 44.37 | 49.46 |
| 10000 | 100 | 29862 | 52750 | 22888 | 2.29 | 77% | 334.87 | 189.57 | 145.30 |
| 100000 | 10 | 242869 | 398737 | 155868 | 1.56 | 64% | 411.74 | 250.79 | 160.95 |
| 1000000 | 1 | 2129939 | 3056955 | 927016 | 0.93 | 44% | 469.50 | 327.12 | 142.37 |

Notice the Sync ms column in the second table. The synchronous times were much higher than in 2014, and in some cases the run times more than doubled when switching from the asynchronous to the synchronous availability group. I was very surprised that the new version of SQL that "just runs faster" was seemingly running slower under synchronous commit. I decided to open a case with Microsoft to see if they could explain the apparent performance degradation in SQL 2016.

The Answer

After working with one Microsoft support tech for many months and not getting any answers, I was escalated to another engineer who was very helpful and gave me the answer on our first call. The difference comes down to what was changed between SQL Server 2014 and SQL Server 2016. One of the things that changed was how log stream compression is handled by default for synchronous AG setups. In SQL 2014, log stream compression was turned on by default for both asynchronous and synchronous setups. In SQL 2016, log stream compression was left on for asynchronous availability groups but turned off for synchronous ones. You can enable log stream compression for synchronous availability groups using trace flag 9592. See these two links for information from Microsoft:

- https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-traceon-trace-flags-transact-sql

- https://blogs.technet.microsoft.com/simgreci/2016/06/17/sqlserver-2016-alwayson-and-new-log-transport-behavior/

Once I enabled the trace flag in my 2016 instance and reran my tests, the results in 2016 began to look like I had originally expected them to.

Results in 2016 with log stream compression:

| Inserts | Rows Per Insert | Async ms | Sync ms | Penalty ms | Pen ms/ins | Penalty % | Async ins/s | Sync ins/s | Penalty ins/s |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1000000 | 8560 | 8131 | -429 | -429 | -5% | 0.12 | 0.12 | -0.01 |
| 10 | 100000 | 8657 | 8363 | -294 | -29 | -3% | 1.16 | 1.20 | -0.04 |
| 100 | 10000 | 9894 | 10279 | 384 | 4 | 4% | 10.11 | 9.73 | 0.38 |
| 1000 | 1000 | 13148 | 16928 | 3780 | 4 | 29% | 76.06 | 59.07 | 16.98 |
| 10000 | 100 | 32103 | 44546 | 12443 | 1 | 39% | 311.50 | 224.49 | 87.01 |
| 100000 | 10 | 273164 | 348095 | 74932 | 1 | 27% | 366.08 | 287.28 | 78.80 |
| 1000000 | 1 | 2292889 | 3096621 | 803732 | 1 | 35% | 436.13 | 322.93 | 113.20 |

As you can see, the gap between the synchronous and asynchronous times is much smaller, and the penalties are now better overall than they were in SQL 2014.
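To make the comparison concrete, the Penalty % columns from the three result tables can be lined up side by side. The snippet below is just a convenience sketch; the numbers are copied from the tables, and the dictionary keys and helper name are labels I made up.

```python
ROWS_PER_INSERT = [1_000_000, 100_000, 10_000, 1_000, 100, 10, 1]

# Penalty % (sync vs async) copied from the three result tables.
PENALTY_PCT = {
    "2014": [9, 41, 17, 42, 30, 33, 41],
    "2016": [95, 120, 106, 111, 77, 64, 44],
    "2016 + TF 9592": [-5, -3, 4, 29, 39, 27, 35],
}


def improved_count(candidate, baseline):
    """Count workloads where the candidate's penalty % is lower than the baseline's."""
    return sum(c < b for c, b in zip(PENALTY_PCT[candidate], PENALTY_PCT[baseline]))


if __name__ == "__main__":
    print(f"{'rows/insert':>12}" + "".join(f"{name:>16}" for name in PENALTY_PCT))
    for i, rows in enumerate(ROWS_PER_INSERT):
        cells = "".join(f"{PENALTY_PCT[name][i]:>15}%" for name in PENALTY_PCT)
        print(f"{rows:>12}{cells}")
    print("2016 + TF 9592 beats 2014 in",
          improved_count("2016 + TF 9592", "2014"), "of", len(ROWS_PER_INSERT), "workloads")
```

With the trace flag on, the penalty is lower than in 2014 for every workload except 10000 inserts of 100 rows, where it went from 30% to 39%.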

The Conclusion

Know your workload and know what changed. Do I recommend turning on trace flag 9592 on every server? Of course not.

Almost every time you ask a SQL Server professional a performance question you will get the same answer: "It depends". It may seem like a cop-out, but it is very true. There are a lot of factors that go into performance and one of the key ones is workload. Is your workload true OLTP with a mix of reads and inserts/updates/deletes, is it a pure read workload like an AG read-only replica, or is it a data warehouse where you get heavy inserts during the night and mostly reads during business hours? Knowing what type of workload you have helps you answer the question of whether you should use a trace flag or not. In my test case, I was doing pure inserts of char data, which benefited greatly from having compression turned on. Your workload may be such that you see a performance degradation by using the trace flag.

When you upgrade or migrate a database from one version to the next, you need to understand what changes were made under the hood that could impact you. On the production DBA side, you should understand what changes are being made to the features that you use in your environment (like availability groups). If you are on the application DBA side of things, you should understand what changes are being made to the query engine. You should know about the changes that have been made to the query optimizer in the last few releases, how those changes can affect your code and how to turn them off or on. In SQL 2016, Microsoft took some features that were accessible through trace flags (like -T1117 and -T1118) and made them default. By understanding what the changes are and how they will affect you, you will be better equipped to resolve an issue when it pops up, and hopefully you won't spend months looking at something that could have been fixed with a simple startup trace flag.